Ptolemy and Copernicus: how astronomers can teach us about quantitative rigor

The economist Steve Keen is quite controversial. He’s stirred some discontent in mainstream economic circles by attacking econometric modeling, which he calls a “Ptolemaic” approach to understanding the economy. For those who aren’t antiquity buffs, let me get you up to speed on Ptolemy. He was a 2nd-century astronomer in Roman Egypt, and his main claim to fame was furthering Plato’s geocentric model (the earth is the center of the universe). He did this through the application of geometry, not just educated guessing. His work culminated in a model in which the sun and the observable planets traveled on perfect circles around a point a few smidges away from the earth.

Ptolemy did not question the conventional wisdom of geocentrism. Instead, he worked doggedly toward a mathematical fit (rather than a proof) that seemed to generally work. I say generally because it couldn’t account for the unsettling irregularities that we now know arise because the earth is not the center of the solar system and celestial orbits are not circular. It took almost 1,400 years for Copernicus to come along and repudiate Ptolemy’s equant theory. Copernicus was skeptical of the gradual degradation of Ptolemy’s “model fit”: after several centuries of orbits, Ptolemy’s math no longer checked out, and the bodies didn’t behave as expected. For those 1,400 years, astronomers and mathematicians had treated this as an anomaly and moved on with their lives.

The point of this isn’t to bore you with astronomy, but to show how beginning with a fundamental flaw (in this case geocentrism rather than heliocentrism) can spawn an entire canon of work that attempts to fit around anomalous behavior. Ptolemy needed his equants to rationalize why geocentrism and uniform circular motion didn’t quite reconcile. He spent a career doing this, rather than questioning the foundation of the thesis.

Circling back to Steve Keen (pun intended): he equates this to how economists today attempt to explain economic behavior, and sees it as the reason their models seem to consistently miss impending calamity (e.g., financial crises). Keen is famous for predicting the Great Financial Crisis. His analysis focused on the impact of debt accumulation rather than applying microeconomic models to explain macroeconomic conditions. He argues that foundational microeconomic modeling, the orthodoxy, necessitates an oversimplification of fundamentals, which leads to occasional anomalies. Sound familiar?

Model-Fitting Quants

To rein the discussion back in to all things Epsilon: we started this post with the bit above because we think there is value in questioning first principles before going down a rabbit hole of complex math and modeling. We think this is especially useful in quantitative finance and statistical modeling. In quant investing, these problems show up as curve fitting, or p-hacked regressions.

Practically everybody…overweighs the stuff that can be numbered, because it yields to the statistical techniques they’re taught in academia – Charlie Munger

Let’s do a quick, oversimplified review of how quant investing works. Quantitative investors seek market anomalies to arbitrage, often through pattern recognition in historical data. To unearth these anomalies, highly trained quants are increasingly using complex methodologies taught in academia. There is an arms race for modeling talent, because these anomalies are increasingly elusive. The low-hanging fruit has largely been plucked over the last few decades. Studies show that anomaly-based alphas deteriorate over time (Chordia, 2014), so the quant investor is always on a treadmill: running to stay still. To speed up the incubation of hypotheses, quants have increasingly applied machine learning methods. These methods abstract away the formulation of intuitive hypotheses when testing for anomalies. The goal is to concentrate resources on learning algorithms, and to use that work as leverage for finding those hidden gems more rapidly.

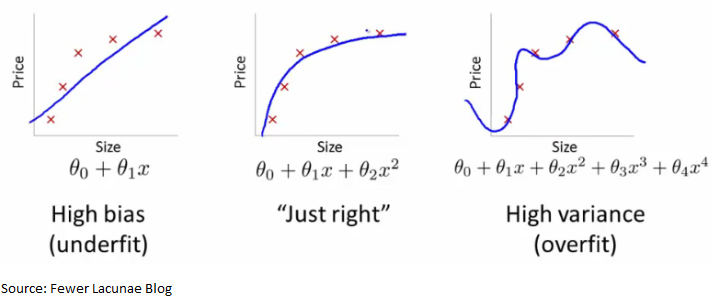

A few glaring problems have been cropping up. The first is the broken clock syndrome, where observed correlations prove to be ephemeral and aren’t replicated in practice. Machine learning, which is otherworldly at finding persistent patterns in strict rule structures (like chess or Go), struggles (at least comparatively!) with adaptive markets. This is partly because the art of knowing what’s “just right” versus overfit is often a function of intuition, market experience, and discipline, rather than academic pedigree. A great paper on the subject (Chordia, Goyal, and Saretto, 2017) showed how data mining over two million different trading strategies lands on statistically superior outcomes that inevitably prove spurious.
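To make the data-mining trap concrete, here is a minimal sketch of what happens when many strategies with no edge at all are tested against the same significance bar. Everything in it is an illustrative assumption rather than a reproduction of the paper: the returns are independent draws from a normal distribution with zero mean, and the names and values (`mine_strategies`, `n_strategies`, `n_periods`, the 5% volatility, the seed) are hypothetical.

```python
import random
import statistics
from math import sqrt


def t_stat(returns):
    """t-statistic of the mean return against a null of zero."""
    n = len(returns)
    return statistics.mean(returns) / (statistics.stdev(returns) / sqrt(n))


def mine_strategies(n_strategies=2000, n_periods=120, seed=7):
    """Simulate strategies with NO true edge and see how many look significant."""
    rng = random.Random(seed)
    in_sample, out_of_sample = [], []
    for _ in range(n_strategies):
        # Assumption: i.i.d. normal returns with zero mean, i.e. pure noise.
        rets = [rng.gauss(0.0, 0.05) for _ in range(2 * n_periods)]
        in_sample.append(t_stat(rets[:n_periods]))
        out_of_sample.append(t_stat(rets[n_periods:]))
    # Count "discoveries" at the conventional two-sided 5% threshold.
    false_hits = sum(1 for t in in_sample if abs(t) > 1.96)
    # Pick the strategy a data miner would be most excited about.
    best = max(range(n_strategies), key=lambda i: in_sample[i])
    return {
        "false_hits": false_hits,
        "best_in_sample_t": in_sample[best],
        "best_out_of_sample_t": out_of_sample[best],
    }


result = mine_strategies()
print(result)
```

Under these assumptions, roughly one in twenty of the no-edge strategies should clear the threshold by chance alone, and the single best in-sample performer has no reason to repeat its result on the held-out half. Real strategy returns are neither independent nor normal, so this understates how messy the problem is in practice.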

The reality is that fuzzy academic principles don’t marry well with the messiness of real data. Financial data is notoriously tricky and noisy. It lacks uniformity, as the mathematician Benoit Mandelbrot wrote. Quant researchers often try to simplify this, as they have been trained to do in academia, through statistical transformations. These transformations and techniques (e.g., log transformation, winsorization, residual regressions) attempt to cut out the non-normal characteristics of the data so that traditional methods can be applied to a sanitized distribution.
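As a rough illustration of what such sanitizing does, the sketch below winsorizes a synthetic fat-tailed return series and compares the tails before and after. The data is an assumption made up for the example (a mix of calm and turbulent normal draws, not market data), and `winsorize`, `excess_kurtosis`, and the 1%/99% cutoffs are hypothetical choices, not a prescribed method.

```python
import random
import statistics


def winsorize(values, lower_pct=0.01, upper_pct=0.99):
    """Clamp values beyond the chosen empirical quantiles to those quantiles."""
    ordered = sorted(values)
    last = len(ordered) - 1
    lo = ordered[int(lower_pct * last)]
    hi = ordered[int(upper_pct * last)]
    return [min(max(v, lo), hi) for v in values]


def excess_kurtosis(values):
    """Population excess kurtosis; 0 for a normal distribution, higher for fat tails."""
    mean = statistics.fmean(values)
    var = statistics.pvariance(values, mu=mean)
    fourth = statistics.fmean([(v - mean) ** 4 for v in values])
    return fourth / var ** 2 - 3.0


def fat_tailed_return(rng):
    # Assumption: 5% of periods are "turbulent" with five times the volatility.
    vol = 0.05 if rng.random() < 0.05 else 0.01
    return rng.gauss(0.0, vol)


rng = random.Random(11)
raw = [fat_tailed_return(rng) for _ in range(5000)]
clipped = winsorize(raw)

print("worst raw return:    ", min(raw))
print("worst clipped return:", min(clipped))
print("excess kurtosis raw: ", excess_kurtosis(raw))
print("excess kurtosis clip:", excess_kurtosis(clipped))
```

The clipped series looks far better behaved, but only because the extreme observations were overwritten. Those are precisely the observations that matter in a tail-risk scenario.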

Foundations built on untested assumptions invariably lead to backtests that never truly perform as expected, especially in tail-risk scenarios. This ugly truth is often masked by the self-selection of “valid” signals, which amplifies an echo chamber in the community, or a sense of self-validation. The PnL often says otherwise.

The quant researcher must be sober in assessment and critical in thinking. The reward for finding true anomalies that persist (the holy grail) pits eagerness against objectivity. Most historical anomalies are arbitraged away, so the default assumption must be that an apparent anomaly is spurious rather than truly abnormal. Practitioners must have a firm respect for the brutal competition of markets, and too many quant investors come from scientific and academic practice where they never dealt with this harsh reality. They must take Copernicus’ approach of questioning foundational beliefs before building layers of complexity.

Suggested Readings:

Bajgrowicz, Pierre, and Olivier Scaillet, 2012, Technical Trading Revisited: False Discoveries, Persistence Tests, and Transaction Costs.

Chordia, Goyal, and Saretto, 2017, p-hacking: Evidence from Two Million Trading Strategies.

Harvey, Campbell R., 2017, The Scientific Outlook in Financial Economics, Presidential Address.

Ioannidis, John, 2005, Why Most Published Research Findings are False.

King, Gary, 1986, How Not to Lie with Statistics: Avoiding Common Mistakes in Quantitative Political Science.

Lo, Andrew W., and A. Craig MacKinlay, 1990, Data-Snooping Biases in Tests of Financial Asset Pricing Models.

Nester, Marks R., 1996, An Applied Statistician’s Creed.

Mandelbrot, Benoit, and Richard L. Hudson, 2006, The Misbehavior of Markets: A Fractal View of Financial Turbulence.
